Complexity: From Suck, Bang, Blow to Norb's Cybernetic Manifesto

A generalized understanding of the dynamics of any system grants predictive power, and predictive power allows us to adapt appropriately: not only to survive, but to thrive and flourish into the future. Humanity has been busy building systems to aid our collective development. When constructing new systems to aid our material survival, humans seek to control for all sorts of variables that could decrease the system's likelihood of success. The mechanistic nature of such simple, engineered systems means their future outcomes can be calculated algorithmically and, when necessary, course-corrected.

Suck, bang, blow: the internal combustion engine credo, shorthand for intake [suck], compression and power [bang], and exhaust [blow]. As the piston moves down with the intake valve open, the fuel-air mixture is drawn into the cylinder. On the upward stroke, with both valves closed, the mixture is compressed. Ignition of the compressed mixture forces the piston down, turning the crankshaft and generating power. Finally, the next upward stroke pushes the exhaust out through the open exhaust valve.

This cycle repeats rapidly to sustain continuous engine operation. If any one piece of the mechanism becomes contaminated or fails, an algorithmic diagnostic process can be applied, parts swapped, and the equilibrium restored. The sterile nature of closed, simple systems with uniform inputs makes their output predictable. Linear systems, in which outputs scale in direct proportion to inputs, often yield to straightforward solution techniques. Examples include Gaussian elimination for systems of linear equations and Fourier transforms for linear differential equations with constant coefficients, which makes linear systems ideal for deterministic modeling.
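To make the "straightforward techniques" concrete, here is a minimal sketch of Gaussian elimination with partial pivoting in plain Python. The function name `solve_linear_system` and the example system are illustrative assumptions, not part of any particular library. In practice, a library routine such as NumPy's `numpy.linalg.solve` would be the usual choice.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting.

    a is a list of n rows (each a list of n numbers); b is a list of n numbers.
    (Hypothetical helper for illustration.)
    """
    n = len(a)
    # Build the augmented matrix [a | b] as floats so the inputs are untouched.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: move the row with the largest entry in this column up.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every row below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


# 2x + y - z = 8,  -3x - y + 2z = -11,  -2x + y + 2z = -3
# (the exact solution is x = 2, y = 3, z = -1)
print(solve_linear_system([[2, 1, -1], [-3, -1, 2], [-2, 1, 2]], [8, -11, -3]))
```

The procedure is a fixed, finite sequence of arithmetic steps. That is exactly the kind of algorithmic predictability the paragraph attributes to linear systems.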

In addition to building systems of our own, humanity has also been interested in the governing dynamics of the systems we inhabit. In the physical world, titans of prediction such as Galileo, Kepler, Copernicus, and Newton gained insights into the nature of our universe by observing celestial bodies and attempting to predict their movements. Gravitation is described [as far as we can tell] as an attractive force between two objects. That force is proportional to the product of their masses and inversely proportional to the square of the distance between them. Although gravity is still not understood from first principles, an even more disturbing concept arises from the n-body problem: for three or more bodies, there is no general closed-form solution for their motion.
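For reference, below is the standard textbook form of Newton's law of universal gravitation. Here $G$ is the gravitational constant and $r$ is the distance between the two bodies' centers of mass. It is followed by the resulting equation of motion for body $i$ in an $n$-body system, with position $\mathbf{r}_i$ and mass $m_i$:

$$F = G\,\frac{m_1 m_2}{r^2}$$

$$\ddot{\mathbf{r}}_i = G \sum_{j \neq i} m_j \,\frac{\mathbf{r}_j - \mathbf{r}_i}{\lVert \mathbf{r}_j - \mathbf{r}_i \rVert^{3}}, \qquad i = 1, \dots, n$$

For $n = 2$, these equations reduce to Kepler's closed-form conic-section orbits. For $n \ge 3$, no general closed-form solution exists.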

Newton grappled with the three-body problem, which concerns three mutually attracting objects, typically pictured as the Sun, Earth, and Moon. The problem revealed that even a system of just three bodies has too few conserved quantities to reduce its equations of motion to a general closed-form solution. The equations remain deterministic, but in general they can only be solved numerically, step by step. Many configurations are also chaotic: tiny differences in initial conditions grow into large differences in outcome.
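The numerical route to prediction can be sketched in plain Python. The sketch steps the Newtonian equations forward with velocity Verlet, one common integrator among many, and compares two runs whose starting points differ by a billionth of a unit. The setup is an assumption chosen for illustration:

- units in which G = 1;
- three equal masses placed on Lagrange's rotating equilateral-triangle solution, an arrangement known to be unstable for equal masses;
- a nudge of 1e-9 to one coordinate.

All function names are hypothetical.

```python
import math

G = 1.0  # assumption: units chosen so that the gravitational constant is 1


def accelerations(pos, masses):
    """Newtonian acceleration on each body due to all the others (2D)."""
    acc = [[0.0, 0.0] for _ in pos]
    for i, (xi, yi) in enumerate(pos):
        for j, (xj, yj) in enumerate(pos):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            r = math.hypot(dx, dy)
            k = G * masses[j] / r**3
            acc[i][0] += k * dx
            acc[i][1] += k * dy
    return acc


def simulate(pos, vel, masses, dt=1e-3, steps=10_000):
    """Velocity Verlet integration; returns final (positions, velocities)."""
    pos = [list(p) for p in pos]  # copy so the caller's lists are untouched
    vel = [list(v) for v in vel]
    acc = accelerations(pos, masses)
    for _ in range(steps):
        # Advance positions using current velocities and accelerations.
        for p, v, a in zip(pos, vel, acc):
            p[0] += v[0] * dt + 0.5 * a[0] * dt * dt
            p[1] += v[1] * dt + 0.5 * a[1] * dt * dt
        # Advance velocities using the average of old and new accelerations.
        new_acc = accelerations(pos, masses)
        for v, a, b in zip(vel, acc, new_acc):
            v[0] += 0.5 * (a[0] + b[0]) * dt
            v[1] += 0.5 * (a[1] + b[1]) * dt
        acc = new_acc
    return pos, vel


def lagrange_triangle():
    """Hypothetical start: three unit masses on an equilateral triangle of
    circumradius 1, each moving at the speed of Lagrange's rotating solution."""
    speed = (1 / math.sqrt(3)) ** 0.5  # v^2 = G m / (sqrt(3) R) with G = m = R = 1
    pos, vel = [], []
    for k in range(3):
        theta = 2 * math.pi * k / 3
        pos.append([math.cos(theta), math.sin(theta)])
        vel.append([-speed * math.sin(theta), speed * math.cos(theta)])
    return pos, vel, [1.0, 1.0, 1.0]


pos, vel, masses = lagrange_triangle()
nudged = [list(p) for p in pos]
nudged[0][0] += 1e-9  # perturb one coordinate by a billionth

end_a, vel_a = simulate(pos, vel, masses)
end_b, _ = simulate(nudged, vel, masses)
gap = max(math.dist(a, b) for a, b in zip(end_a, end_b))
print(f"largest position difference after t = 10: {gap:.3e}")
```

Raising `steps` integrates further forward. In an unstable configuration like this one, small differences are expected to grow rather than fade, though how fast depends on the setup. A fixed step size also loses accuracy during close encounters, which is one reason serious work tends to use adaptive integrators.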

Away from the world of pure mathematics, the natural systems we experience are open, extraordinarily interconnected, and interdependent, which gives rise to complexity. Complex systems display a wide variety of features. Most notably, they are adaptive, relatively robust, and extremely rich in nuance. Learning to describe and better model complex systems such as our own biology, our social interactions, and the climate, among countless others, means grappling with systems rife with nonlinearity, emergence, spontaneous order, adaptation, and feedback loops.

In the language of computer science and large-scale modeling, the Curse of Dimensionality refers to the challenges that arise when dealing with high-dimensional data. As the number of features or dimensions in a dataset increases, the amount of data required to adequately sample the space grows exponentially. This leads to problems such as data sparsity, increased computational cost, and overfitting in predictive models. In high-dimensional spaces, distances between data points become less meaningful, making it difficult to discern patterns or make accurate predictions. This poses significant challenges for predictive modeling, because many real-world datasets are inherently high-dimensional. Overcoming the Curse of Dimensionality often involves dimensionality reduction techniques, such as principal component analysis or feature selection, which carry tradeoffs of their own. Similarly, ask a researcher like Steve Koonin how well the modeling of something as complex as the climate has gone.
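One quick way to see distances "become less meaningful" is to measure distance concentration. The approach draws random points uniformly in a unit hypercube and compares a query point's farthest and nearest neighbors. This plain-Python sketch computes the relative contrast (max - min) / min, which tends to shrink toward zero as the dimension grows, so "nearest" and "farthest" become hard to tell apart. The function name, the 200-point sample, and the fixed seed are arbitrary assumptions for illustration.

```python
import math
import random


def distance_contrast(dim, n_points=200, seed=0):
    """Relative contrast (max - min) / min of the distances from one random
    query point to n_points others, all uniform in the unit hypercube."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


for dim in (2, 10, 100, 1000):
    # A grid with just 10 bins per axis would need 10**dim cells to cover the space.
    print(f"dim={dim:5d}  contrast={distance_contrast(dim):.3f}")
```

The same sample size that covers a plane densely is hopelessly sparse in 1,000 dimensions. Distance-based methods such as nearest-neighbor prediction degrade accordingly.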

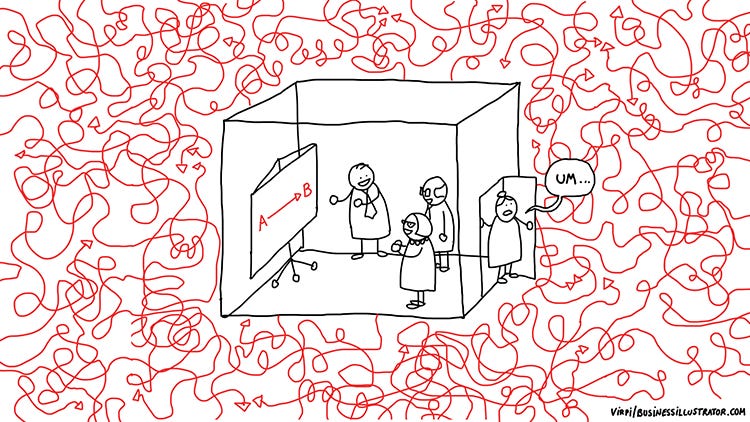

Humanity’s hubris has been to degrade the complexity of many such systems in an attempt to wield the same predictive power over them that we hold over our beloved internal combustion engines. Our ever more pressing attempts at reductionism have left us grappling ever more for an answer to the P versus NP problem, without pausing to ask why we are asking the question in the first place. Our perception as individuals and groups is constantly changing. With innumerable variables dancing across landscapes of ever-shifting complexity, even the most sophisticated computers cannot determine the specific perception that will arise in an individual or a group. Often the systems with the largest Kolmogorov complexity (the length of the shortest program that can fully reproduce a description of them) are the very ones that provide the basis of all that we hold dear.
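Kolmogorov complexity itself is uncomputable, but a compressor's output length gives a practical upper bound on it (up to a constant). That makes a rough illustration possible. This sketch uses Python's standard `zlib` module. The sample data and the helper name `compressed_size` are hypothetical. A highly regular byte string shrinks dramatically, while random bytes barely compress at all. Note that by this measure, pure noise scores highest of all.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Length of the zlib-compressed data: a crude upper-bound proxy for
    Kolmogorov complexity, which cannot be computed exactly."""
    return len(zlib.compress(data, 9))


ordered = b"suck, bang, blow. " * 1000   # highly regular, 18,000 bytes
random_bytes = os.urandom(len(ordered))  # almost surely incompressible

print("ordered:", len(ordered), "->", compressed_size(ordered))
print("random: ", len(random_bytes), "->", compressed_size(random_bytes))
```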

Those among us who seek to wield influence over other bodies within the larger systems we inhabit, such as our socioeconomic and political theater and beyond, will attempt to reduce complexity by any means necessary. They constrain and bind a system into something more palatable, more easily understood, and more easily influenced. A reduction in the diversity or competition within a system is typically a good place to start, and each field has its own yardstick for it:

- in biology, Simpson’s diversity index;
- in political science, the effective number of parties;
- in economics, the Herfindahl–Hirschman index of market concentration and competition.
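The three yardsticks named above share a common core: the sum of squared shares $\sum_i p_i^2$.

- Simpson's index is that sum, often reported as $1 - \sum_i p_i^2$ or as its reciprocal.
- Laakso and Taagepera's effective number of parties is its reciprocal.
- The HHI is the same sum computed on percentage shares, giving a 0 to 10,000 scale.

A minimal Python sketch follows. It assumes infinite-sample proportions; Simpson's finite-sample variant uses $n(n-1)$ terms instead. The function names and share vectors are hypothetical.

```python
def sum_of_squared_shares(values):
    """Normalize counts or shares to proportions p_i and return sum(p_i ** 2)."""
    total = sum(values)
    return sum((v / total) ** 2 for v in values)


def simpson_diversity(counts):
    """Gini-Simpson diversity 1 - sum(p_i^2): the chance that two individuals
    drawn at random (with replacement) belong to different species."""
    return 1 - sum_of_squared_shares(counts)


def effective_number_of_parties(vote_shares):
    """Laakso-Taagepera effective number of parties: 1 / sum(p_i^2)."""
    return 1 / sum_of_squared_shares(vote_shares)


def herfindahl_hirschman_index(market_shares):
    """HHI on the conventional 0-10,000 scale (percentage shares, squared)."""
    return 10_000 * sum_of_squared_shares(market_shares)


competitive = [25, 25, 25, 25]  # hypothetical: four equal firms, parties, or species
dominated = [85, 5, 5, 5]       # hypothetical: one dominant player
for name, shares in [("competitive", competitive), ("dominated", dominated)]:
    print(name,
          round(simpson_diversity(shares), 3),
          round(effective_number_of_parties(shares), 2),
          round(herfindahl_hirschman_index(shares)))
```

Because all three are transforms of one quantity, a drop in diversity in one vocabulary is a rise in concentration in another.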

Consider the dynamics of a society: when individuals' freedoms are constrained, their range of possible actions narrows, resulting in a more homogeneous, uniform social landscape. Similarly, in mathematical systems, as complexity decreases, the range of possible states or behaviors narrows, leading to a more predictable system. A Nash equilibrium is a situation in which no player can gain by unilaterally changing their own strategy. It is a state where each body within the system has optimized against all other bodies, and stagnation and sterility result.
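A brute-force check for pure-strategy Nash equilibria in a two-player game makes the definition concrete. A cell of the payoff table is an equilibrium when the row player cannot do better by switching rows and the column player cannot do better by switching columns. The payoffs here are a hypothetical prisoner's dilemma, and the function name is illustrative.

```python
from itertools import product


def pure_nash_equilibria(payoff_a, payoff_b):
    """Return every (row, col) pair where neither player gains by deviating
    unilaterally. payoff_a[r][c] and payoff_b[r][c] are the row and column
    players' payoffs when they play row r and column c."""
    rows, cols = len(payoff_a), len(payoff_a[0])
    equilibria = []
    for r, c in product(range(rows), range(cols)):
        # Row player: is r a best response to column c?
        row_best = all(payoff_a[r][c] >= payoff_a[r2][c] for r2 in range(rows))
        # Column player: is c a best response to row r?
        col_best = all(payoff_b[r][c] >= payoff_b[r][c2] for c2 in range(cols))
        if row_best and col_best:
            equilibria.append((r, c))
    return equilibria


# Hypothetical prisoner's dilemma payoffs: strategy 0 = cooperate, 1 = defect.
A = [[3, 0],
     [5, 1]]
B = [[3, 5],
     [0, 1]]
print(pure_nash_equilibria(A, B))  # prints [(1, 1)]: both players defect
```

The only equilibrium is mutual defection, even though both players would do better by cooperating. The check finds pure strategies only. A game such as matching pennies has no pure equilibrium, and its equilibrium, which Nash's theorem guarantees for finite games, is in mixed strategies.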

Conversely, over-indexing on the very features that, in balance, yield complexity can spell disaster for the system too. Hyper-individuation, hyper-diversity, hyper-connectedness, and hyper-interdependence can all result in a loss of complexity within an ecosystem. The connection between loss of complexity in mathematics and loss of freedom in society lies in their shared implications for variability and diversity: in both cases, a reduction in complexity or freedom leads to a loss of diversity and options.

The godfather of cybernetics, Norbert Wiener, wrote in *The Human Use of Human Beings: Cybernetics and Society*:

> As entropy increases, the universe, and all closed systems in the universe, tend naturally to deteriorate and lose their distinctiveness, to move from the least to the most probable state, from a state of organization and differentiation in which distinctions and forms exist, to a state of chaos and sameness. In Gibbs’ universe order is least probable, chaos most probable. But while the universe as a whole, if indeed there is a whole universe, tends to run down, there are local enclaves whose direction seems opposed to that of the universe at large and in which there is a limited and temporary tendency for organization to increase. Life finds its home in some of these enclaves.